New research, tooling, and partnerships for more secure AI and machine learning

Today we’re on the verge of a monumental shift in the technology landscape that will forever change the security community. AI and machine learning may embody the most consequential technology advances of our lifetime, bringing huge opportunities to build, discover, and create a better world.

Brad Smith recently pointed out that 2023 will likely mark the inflection point for AI going mainstream, the same way we think of 1995 for browsing the internet or 2007 for the smartphone revolution. And while Brad outlines some major opportunities for AI across industries, he also calls out the deep responsibility involved for those who develop these technologies. One of the biggest opportunities is also a core responsibility for us at Microsoft – building a more secure digital future. AI has the incredible potential to reshape our security landscape and protect organizations and people in ways we have been unable to do in the past.

With all of AI’s potential to empower people and organizations, it also comes with risks that the security community must address. It is imperative that we as an industry and global technology community get this journey right, and that means looking at AI with diverse perspectives, taking it slowly, working with our partners across government and industry – and sharing what we’re learning.

At Microsoft, we’ve been working on the challenges and opportunities of AI for years. Today we’re sharing some recent developments so that the community can be better informed and better equipped for a new world of AI exploration:

- New research: A dedicated AI Security Red Team within Microsoft Threat Intelligence explored how traditional software threats affect AI and how security professionals, developers, and machine learning engineers should think about securing and monitoring AI and machine learning models. This team will continue to research and test security in AI and machine learning as we learn more as a company and as an industry.

- New tools for defenders: Microsoft recently released an open-source automation tool for security testing of AI systems called Counterfit. The tool is designed to help organizations conduct AI security risk assessments and help ensure that the algorithms used in their businesses are robust, reliable, and trustworthy. As of today, our Counterfit tool will now be part of MITRE’s new Arsenal plug-in.

- Industry collaboration to help secure the AI supply chain: We worked with Hugging Face, one of the most popular machine learning model repositories, to mitigate threats to AI and machine learning frameworks by collaborating on an AI-specific security scanner. This tool will help the security community to better secure their software supply chain when it comes to AI and machine learning.

AI brings new capabilities – and familiar risks

AI and machine learning can provide remarkable efficiency gains for organizations and lift the burden from a workforce overwhelmed by data.

As an example, these capabilities can be particularly helpful in cybersecurity. There are more than 1,200 brute-force password attacks per second, and according to McKinsey, many organizations have more than 100 security tools in place, each with its own portal and alerting system to be checked daily. AI will change the way we defend against threats by improving our ability to protect and respond at the speed of an attack.

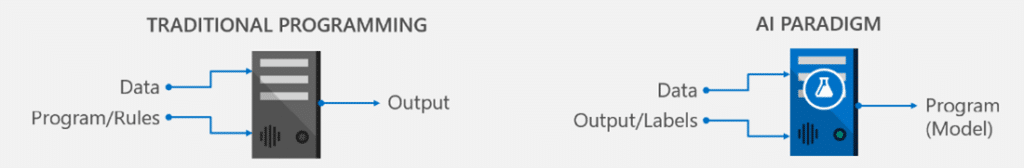

This is why AI is popular right now across industries: it provides a way to solve sophisticated problems involving complex data relationships simply by having humans label input and output examples. It uses the inherent advantages of computing to lift the burden of massive data and speed our path to insights and discoveries.

But with its capabilities, AI also brings some risks that organizations may not be considering. Many businesses are pulling existing models from public AI and machine learning repositories as they work to apply AI models to their own operations. But often, either the software used to build AI systems or the AI models housed in the repositories have not been moderated. This creates the risk that anyone can put up a tampered model for consumption, which can poison any system that uses the model.

There is a misconception in the security community that attacking AI and machine learning systems involves exotic algorithms and advanced knowledge of machine learning. But while machine learning may seem like math and magic, at the core it runs on bits and bytes, and like all software, it can be vulnerable to security issues.

Within the Microsoft Threat Intelligence team, we have a group that focuses on understanding these risks. The AI Security Red Team is an interdisciplinary group of security researchers, machine learning engineers, and software engineers whose goal is to proactively identify failure points in AI systems and help remediate them. The AI Security Red Team works to see how attackers approach AI and how they might be able to compromise an AI or machine learning model, so we can understand those attacks and how to get ahead of them.

The research: Old threats take on new life with AI

Recently the AI Security Red Team investigated how easy it would be for an attacker to inject malicious code into AI and machine learning model repositories. Their central question was, how can an adversary with current-day, traditional hacking skills cause harm to AI systems? This question led us to prove that traditional software attack vectors can indeed be a threat.

The security community has long known about Python serialization threats, but not in the context of AI systems. Academic researchers have warned about the lack of security practices in machine learning software. Recently, a wave of research has looked at serialization threats specifically in the context of machine learning. MITRE ATLAS, the ATT&CK-style framework for adversarial machine learning, specifically calls out machine learning supply chain compromise. Even AI frameworks’ security documentation explicitly points out that machine learning model files are designed to store generic programs.

What has been less clear is how far attackers could take this, which is what the Microsoft AI Security Red Team explored. The AI Security Red Team routinely emulates a range of adversaries, from script kiddies to advanced attackers, to understand attack vectors against AI and machine learning systems. To answer our question, we assumed the role of an adversary whose goal is to compromise machine learning systems using only traditional hacking tools and methodology. In other words, our adversary knew nothing about specifically hacking AI.

Our exercise allowed us to assess the impact of poor encryption in machine learning endpoints, improperly configured machine learning workspaces and environments, and overly broad permissions in the storage accounts containing the machine learning model and training data – all of which can be thought of as traditional software threats.

The team found that these traditional software threats can be particularly impactful in the context of AI systems. We looked at two of the AI frameworks most widely used by machine learning engineers and data scientists. These frameworks provide a convenient way to write mathematical expressions to transform data into the required format before running it through an algorithm. The team was able to repurpose one such function, Keras Lambda layer, to inject arbitrary code.

The security community is aware of how Python’s pickle module, which is used for serialization and deserialization of a Python object, can be abused by adversaries. Our work, however, shows that machine learning model file formats, even those that do not use the pickle format, are still flexible enough to store generic programs and can be abused. This also reduces the number of steps the adversary needs to include a backdoor in a model released to the internet or a popular repository.
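To make the pickle risk concrete, here is a minimal and deliberately harmless sketch of the well-known mechanism. The `Payload` class name is hypothetical, and the arithmetic expression stands in for whatever an attacker might choose to run. It is not the red team's proof of concept, only an illustration of why loading an untrusted pickle amounts to running untrusted code.

```python
import pickle


class Payload:
    """Hypothetical object whose pickled form is 'call this function', not 'restore this state'."""

    def __reduce__(self):
        # pickle records the callable and its arguments; the call happens at load time.
        # A harmless arithmetic expression stands in for attacker-chosen code.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())

# Loading the bytes invokes eval("6 * 7"); the caller gets back the result of
# that call instead of a Payload instance.
result = pickle.loads(blob)
print(type(result).__name__, result)
```

The point of the sketch is that the code runs inside `pickle.loads` itself, before the caller has a chance to inspect what was loaded.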

In our proof of concept, we were able to repurpose the mathematical expression processing function to load malware. An added advantage to the adversary: the attack is self-contained and stealthy; it does not require loading extra custom code prior to loading the model itself.
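From the defender's side, one modest precaution is to look for Lambda layers in a model's architecture description before deserializing it. The sketch below assumes the architecture has already been extracted as JSON made of nested `class_name` and `config` entries, which is how Keras commonly describes layers. The helper name `find_lambda_layers` and the sample configuration are hypothetical, and finding nothing does not prove a model is safe.

```python
import json


def find_lambda_layers(node, found=None):
    """Recursively collect the names of layers whose class_name is 'Lambda'."""
    if found is None:
        found = []
    if isinstance(node, dict):
        if node.get("class_name") == "Lambda":
            config = node.get("config")
            name = config.get("name") if isinstance(config, dict) else None
            found.append(name or "<unnamed>")
        for value in node.values():
            find_lambda_layers(value, found)
    elif isinstance(node, list):
        for item in node:
            find_lambda_layers(item, found)
    return found


# Hypothetical architecture config, invented for illustration only.
sample_config = json.loads("""
{
  "class_name": "Sequential",
  "config": {
    "name": "demo_model",
    "layers": [
      {"class_name": "Dense", "config": {"name": "dense_1", "units": 8}},
      {"class_name": "Lambda", "config": {"name": "preprocess", "function": "<serialized code>"}}
    ]
  }
}
""")

flagged = find_lambda_layers(sample_config)
print("Lambda layers needing review:", flagged)
```

A flagged layer is a prompt for manual review rather than a verdict, since Lambda layers also have legitimate uses.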

New tools with Counterfit, CALDERA, and ATLAS

In security, we are constantly investing and innovating to learn about attacker behaviors and bring that human-led intelligence to our products. Our mission is to combine the diversity of thinking and experience from our threat hunters and companies we’ve integrated with (like RiskIQ and CyberX), so our customers can benefit from both hyper-scale threat intelligence and AI.

With our announcement today that Microsoft Counterfit is now integrated into MITRE CALDERA, security professionals can build threat profiles to probe how an adversary can attack AI systems, both via traditional methods and through novel machine learning techniques.

This new tool integration brings together Microsoft Counterfit, MITRE CALDERA (the de facto tool for adversary emulation), and MITRE ATLAS to help security practitioners better understand threats to ML systems. This will enable security teams to proactively look for weaknesses in AI and machine learning models and fix them before an attacker can take advantage. Now security professionals can get a holistic and automated security assessment of their AI systems using a tool that they are already familiar with.

“With the rise in real world attacks on machine learning systems that we’ve seen through the MITRE ATLAS collaboration, it’s more important than ever to create actionable tools for security professionals to prepare for these growing threats across the globe. We are thrilled to release a new adversary emulation tool, Arsenal, in partnership with Microsoft and their Counterfit team. These open-sourced tools will enhance the ability of security professionals and ML engineers across the community to test the vulnerability of their ML models through the MITRE CALDERA tools they already know and love.”

Doug Robbins, VP Engineering & Prototyping, MITRE

Investment and innovation with partners

In theory, once a machine learning model is embedded with malware, it can be posted in popular ML hosting repositories for anyone to download. An unsuspecting ML engineer could then download the backdoored ML model, which could lead to the adversary gaining a foothold in the organization’s environment.

To help prevent this, we worked with Hugging Face, one of the most popular ML model repositories, to mitigate such threats by collaborating on an AI-specific security scanner.
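The general idea behind scanning a serialized model can be sketched with the Python standard library alone. The example below is not the scanner described above. It is a simplified illustration, with a hypothetical function name and opcode list, that walks a pickle's opcode stream without unpickling it and reports opcodes that import names or call objects.

```python
import pickle
import pickletools

# Opcodes that import a name or invoke a callable during unpickling.
# This list is an assumption made for the sketch, not an authoritative policy.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> list[str]:
    """Return the names of import/call opcodes found, without loading the pickle."""
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class Payload:
    """Hypothetical object that asks pickle to call a function at load time."""

    def __reduce__(self):
        return (eval, ("6 * 7",))  # harmless stand-in for attacker code


plain = pickle.dumps({"weights": [0.1, 0.2]})
crafted = pickle.dumps(Payload())

print("plain data:", find_suspicious_opcodes(plain))
print("crafted payload:", find_suspicious_opcodes(crafted))
```

Legitimate pickles of custom classes also use these opcodes, so a practical scanner would need an allowlist of trusted imports rather than a blanket rejection.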

We also recommend a Software Bill of Materials (SBOM) for AI systems. We have amended the package URL (purl) specification to include Hugging Face, as well as MLflow. Software Package Data Exchange (SPDX) and CycloneDX, the leading SBOM standards, both leverage the purl specification and allow tracking of ML models. Now any Azure ML, Databricks, or Hugging Face user leveraging Microsoft’s recommended SBOM will have the option to track ML models as part of supply chain security.
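As a rough illustration of what a model identifier could look like in an SBOM, the sketch below builds a purl string for a Hugging Face model from its repository ID and a commit hash. The helper name `huggingface_purl` is hypothetical, and the normalization shown (percent-encoding each segment and lowercasing the revision) is an assumption to check against the purl specification before relying on it.

```python
from urllib.parse import quote


def huggingface_purl(model_id: str, revision: str) -> str:
    """Build a purl of the form pkg:huggingface/<namespace>/<name>@<revision>."""
    namespace, _, name = model_id.rpartition("/")
    segments = [namespace, name] if namespace else [name]
    path = "/".join(quote(segment, safe="") for segment in segments)
    # Assumption: the version is the model's commit hash, written in lowercase.
    return f"pkg:huggingface/{path}@{quote(revision.lower(), safe='')}"


example = huggingface_purl(
    "microsoft/deberta-v3-base",
    "559062ad13d311b87b2c455e67dcd5f1c8f65111",
)
print(example)
```

Pinning the revision to a commit hash, rather than a branch name, is what lets the SBOM entry identify one exact version of the model.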

Threat Intelligence in this space will continue to be a team sport, which is why we have partnered with MITRE and 11 other organizations to empower security professionals to track these novel forms of attack via the MITRE ATLAS initiative.

“Given we distribute hundreds of millions of ML models every month, corrupted artifacts can cause great harm as well as damage the trust in the open-source community. This is why we at Hugging Face actively develop tools to empower users of our platform to secure their artefacts, and greatly appreciate Microsoft’s community contributions in advancing the security of ML models.”

Luc Georges, ML Engineer, Hugging Face

It’s imperative that we as an industry and global technology community are thoughtful and diligent in our approach to securing AI and machine learning systems. At Microsoft, this is core to our focus on AI and our security culture. Because of the nature of emerging technology, in that it’s exactly that – emerging – there are many unknowns. In security, we are constantly investing and innovating to learn about attacker behaviors and bring that human-led intelligence to our products.

The reason we invest in research, tools, and industry partnerships like those we’re announcing today is so we can understand the nature of what those attacks would entail, do our best to get ahead of them, and help others in the security community do the same. There is still so much to learn about AI, and we are continuously investing across our platforms and in red-team-like research to learn about this technology and to help inform how it will be integrated into our platform and products.

Recommendations and resources

The following recommendations for security professionals can help minimize the risks for AI and ML systems:

- Encourage ML engineers to inventory, track and update ML models by leveraging model registries. This will help with keeping track of the models in an organization and their software dependencies.

- Apply existing security best practices to AI systems. This includes sandboxing the environment running ML models via containers and machine virtualization, network monitoring, and firewalls. We have outlined guidance here to get started. By doing this, we treat AI assets as yet another crown jewel that security teams should protect from adversaries.

- Leverage MITRE ATLAS to understand threats to AI systems, and emulate them using Microsoft Counterfit via MITRE CALDERA. This will help security analysts ground their effort in a realistic, numbers-driven approach to protecting AI systems.
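The first recommendation, keeping an inventory of models, can start very simply. The sketch below is an assumption-laden illustration rather than a replacement for a model registry: the function names are hypothetical, and it only records a SHA-256 digest per file in a model directory so that a later change to any artifact can be noticed.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_inventory(model_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to model_dir) to its SHA-256 digest."""
    return {
        path.relative_to(model_dir).as_posix(): sha256_of(path)
        for path in sorted(model_dir.rglob("*"))
        if path.is_file()
    }


def changed_files(model_dir: Path, recorded: dict[str, str]) -> list[str]:
    """List files that were added, removed, or modified since the inventory was recorded."""
    current = build_inventory(model_dir)
    names = current.keys() | recorded.keys()
    return sorted(name for name in names if current.get(name) != recorded.get(name))
```

In use, `build_inventory` would run when a model is approved, with the result stored somewhere the serving environment cannot modify, and `changed_files` would run before each deployment.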

The proof of concept described in this post is part of a broader investment at Microsoft to empower the wide range of stakeholders who play an important role in securely developing and deploying AI systems:

- For security analysts to orient themselves with threats against AI systems, Microsoft, in collaboration with MITRE, released the Adversarial ML Threat Matrix, an ATT&CK-style framework complete with case studies of attacks on production machine learning systems, which has since evolved into MITRE ATLAS.

- For security professionals, Microsoft open-sourced Counterfit to help with assessing the posture of AI systems.

- For security incident responders, we released a bug bar to systematically triage attacks on ML systems.

- For ML engineers, we released a checklist to complete AI risk assessment.

- For developers, we released threat modeling guidance specifically for ML systems.

- For engineers and policymakers, Microsoft, in collaboration with Berkman Klein Center at Harvard University, released a taxonomy documenting various machine learning failure modes.

- For the broader security community, Microsoft hosted the annual Machine Learning Evasion Competition.

- For Azure machine learning customers, we provided guidance on enterprise security and governance.

Contributors: Ram Shankar Siva Kumar with Gary Lopez Munoz, Matthieu Maitre, Amanda Minnich, Shiven Chawla, Raja Sekhar Rao Dheekonda, Lu Zhang, Charlotte Siska, Sudipto Rakshit.

The post New research, tooling, and partnerships for more secure AI and machine learning appeared first on Microsoft Security Blog.

Source: Microsoft Security